From Chatbots to Controlled AI Agents: Six Sigma Architecture for Capital Markets

Building Enterprise-Ready AI Agents for Capital Markets

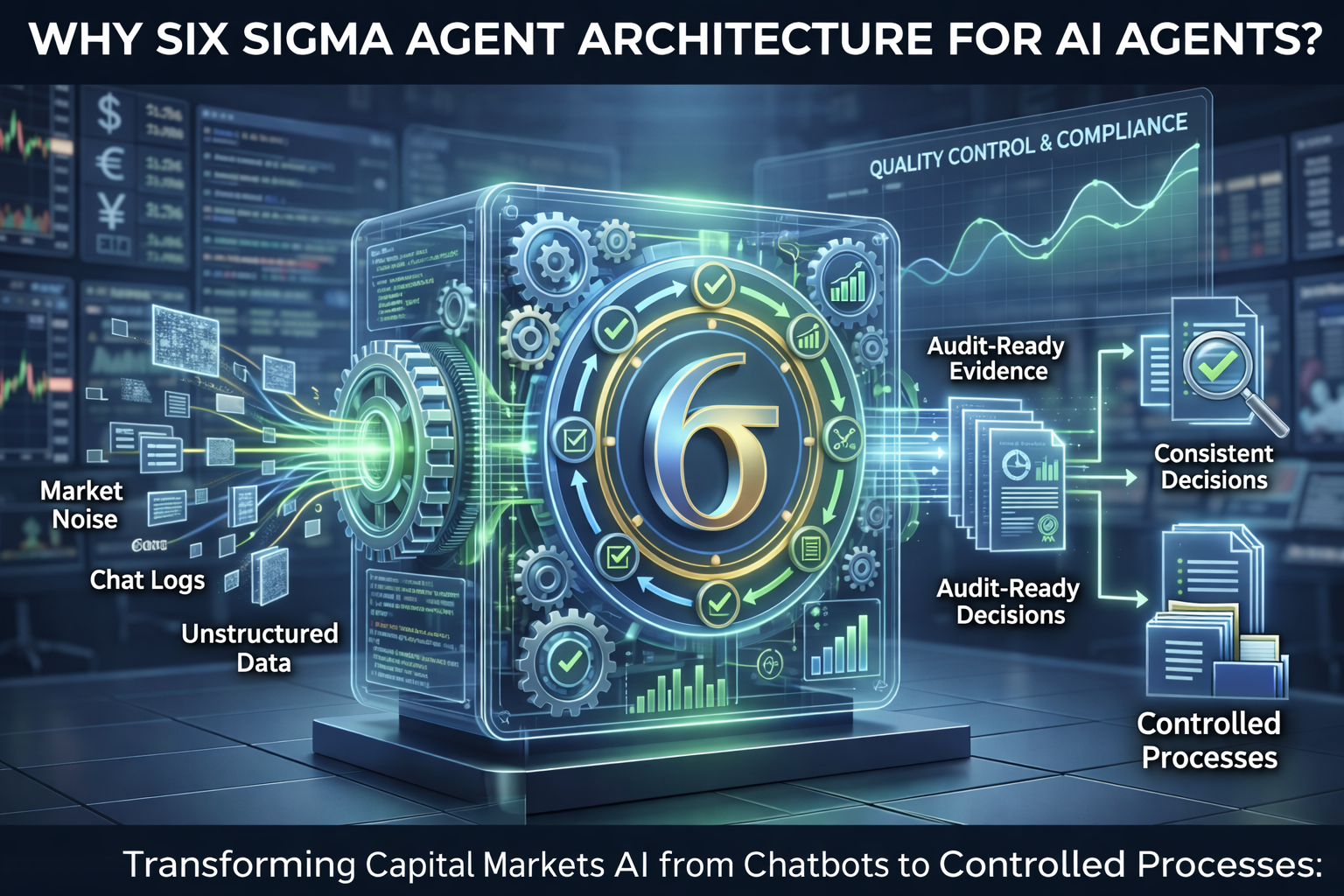

AI in capital markets is moving from “assistants” to “agents.” That sounds exciting until you ship it into trade surveillance, conduct risk, or escalation workflows, where every mistake becomes a compliance issue. At scale, the problem is not whether the model can write a good explanation. The problem is whether the system can produce consistent decisions, measurable performance, and audit-ready evidence, every day, across desks and venues.

That’s why agents in capital markets shouldn’t behave like chatbots. They should behave like controlled processes, with quality controls that look a lot like Six Sigma.

What “Six Sigma agent architecture” means in practice

Six Sigma agent architecture means designing AI agents as controlled, measurable workflows, so that decisions are repeatable, traceable, and auditable.

The main features of controlled agents in surveillance and escalation

If you want agents you can trust in production, these are the features that matter most.

1) Clear decision boundaries

A controlled agent has an explicit scope: what it can do, what it can recommend, and what it must escalate. This prevents the “helpful but risky” behavior you see in chatbot-style agents.

Example: the agent can recommend an abuse typology and route a case, but cannot close a case without human approval.
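A scope like this can be enforced in code rather than left to the prompt. The sketch below assumes a small, hypothetical action set (`Action`, `authorize`, `ScopeViolation` are illustrative names, not a specific product API). It uses a default-deny policy: the agent may recommend and route on its own, closing a case needs a named human approver, and anything not listed is refused.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    RECOMMEND_TYPOLOGY = "recommend_typology"
    ROUTE_CASE = "route_case"
    CLOSE_CASE = "close_case"


# Hypothetical scope policy: what the agent may do alone vs. only with human sign-off.
AUTONOMOUS = frozenset({Action.RECOMMEND_TYPOLOGY, Action.ROUTE_CASE})
NEEDS_HUMAN_APPROVAL = frozenset({Action.CLOSE_CASE})


class ScopeViolation(Exception):
    """Raised when the agent attempts an action outside its explicit scope."""


def authorize(action: Action, approved_by: Optional[str] = None) -> str:
    """Return who authorized the action, or raise if the agent may not take it."""
    if action in AUTONOMOUS:
        return "agent"
    if action in NEEDS_HUMAN_APPROVAL:
        if approved_by:
            return approved_by
        raise ScopeViolation(f"{action.value} requires human approval")
    # Default-deny: any action not explicitly listed is out of scope.
    raise ScopeViolation(f"{action.value} is not in the agent's scope")
```

With this in place, `authorize(Action.CLOSE_CASE)` raises instead of silently closing the case, and the approver's name becomes part of the record.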

2) Measurable outputs, not just narratives

Chatbots optimize for plausible language. Controlled agents optimize for measurable outputs: scores, labels, evidence references, and structured rationales.

Example: each alert includes a signal breakdown, confidence score, key contributing features, and a consistent case summary template.
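One way to make outputs measurable is to reject anything that does not fit a fixed schema. This is a minimal sketch assuming a hypothetical `AlertOutput` record and an illustrative typology list; a real deployment would use its own taxonomy and fields. Validation happens at construction time, and the summary is always rendered from the same template.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Illustrative label set; a real deployment would use its own typology taxonomy.
ALLOWED_TYPOLOGIES = frozenset({"spoofing", "layering", "wash_trading", "marking_the_close"})

# One fixed template so every case summary has the same shape.
SUMMARY_TEMPLATE = (
    "Alert {alert_id}: suspected {typology} (confidence {confidence:.2f}). "
    "Top features: {features}."
)


@dataclass(frozen=True)
class AlertOutput:
    alert_id: str
    typology: str
    signal_breakdown: Dict[str, float]  # per-signal contribution scores
    confidence: float                   # calibrated score in [0, 1]
    top_features: Tuple[str, ...]       # key contributing features, most important first

    def __post_init__(self) -> None:
        # Reject malformed outputs instead of passing free text downstream.
        if self.typology not in ALLOWED_TYPOLOGIES:
            raise ValueError(f"unknown typology: {self.typology}")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")
        if not self.signal_breakdown or not self.top_features:
            raise ValueError("signal_breakdown and top_features must be non-empty")

    def summary(self) -> str:
        return SUMMARY_TEMPLATE.format(
            alert_id=self.alert_id,
            typology=self.typology,
            confidence=self.confidence,
            features=", ".join(self.top_features),
        )
```

Because every alert has the same fields, confidence and signal contributions can be aggregated and trended, which free-text narratives do not allow.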

3) Evidence-first reasoning with traceability

In capital markets, the question is always “based on what?” Controlled agents prioritize retrieval and evidence linking, so that every conclusion is backed by data and sources.

Example: the agent’s rationale points to the exact order/execution sequences, timestamps, and derived features that triggered the detection.
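Traceability can be checked mechanically: every sentence of the rationale should cite at least one record, and every cited record should come from the evidence actually retrieved for the case. The sketch below assumes hypothetical `EvidenceRef` and `Claim` structures; the source names and IDs are placeholders.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Set, Tuple


@dataclass(frozen=True)
class EvidenceRef:
    source: str          # hypothetical source table, e.g. "orders" or "executions"
    record_id: str       # order or execution identifier in that source
    timestamp: datetime  # event time of the referenced record


@dataclass(frozen=True)
class Claim:
    text: str                          # one sentence of the agent's rationale
    evidence: Tuple[EvidenceRef, ...]  # records that support this sentence


def check_traceability(claims: List[Claim], retrieved_ids: Set[str]) -> List[str]:
    """List every claim that cites nothing, or cites a record that was never retrieved."""
    problems = []
    for claim in claims:
        if not claim.evidence:
            problems.append(f"unsupported: {claim.text}")
        for ref in claim.evidence:
            if ref.record_id not in retrieved_ids:
                problems.append(f"unknown record {ref.record_id}: {claim.text}")
    return problems
```

An empty result means every statement points back to data; a non-empty result can block the case summary from being published.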

4) Root-cause analysis and drift monitoring

Most teams focus on alert volume. Controlled agents focus on why alerts fail: false positives, false negatives, data gaps, and regime changes.

Example: weekly analysis of false positives by venue, product, and volatility regime, plus drift detection when feature distributions shift.
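Both halves of that example can be sketched in a few lines. The false-positive breakdown is a simple group-by over dispositioned alerts. For drift, this sketch uses the Population Stability Index (PSI), one common choice among several, computed over matching bins of a feature's distribution. The 0.25 threshold is a widely used rule of thumb, assumed here rather than prescribed.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple

# Commonly used rule of thumb (an assumption here, not a regulatory standard):
# PSI above 0.25 signals a material shift in a feature's distribution.
PSI_ALERT_THRESHOLD = 0.25


def false_positive_rates(alerts: Iterable[dict], keys: Sequence[str]) -> Dict[Tuple, float]:
    """Share of reviewed alerts dispositioned as false positives, per group."""
    totals: Dict[Tuple, int] = defaultdict(int)
    false_pos: Dict[Tuple, int] = defaultdict(int)
    for alert in alerts:
        group = tuple(alert[k] for k in keys)
        totals[group] += 1
        if alert["outcome"] == "false_positive":
            false_pos[group] += 1
    return {group: false_pos[group] / n for group, n in totals.items()}


def psi(expected: List[float], actual: List[float], eps: float = 1e-6) -> float:
    """Population Stability Index over matching bins of proportions (each list sums to 1)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def has_drifted(expected: List[float], actual: List[float]) -> bool:
    return psi(expected, actual) > PSI_ALERT_THRESHOLD
```

Calling `false_positive_rates(alerts, ("venue", "product", "regime"))` gives the weekly breakdown, assuming each alert record carries those keys plus an `outcome` label from the reviewer.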

5) Controlled improvement, not prompt tweaking

You do not want an agent’s behavior changing silently because someone adjusted a prompt. Controlled agents use versioning, approvals, tests, and before/after evaluation.

Example: every model or rule update has an owner, a test set, acceptance thresholds, and a rollback path.
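A change gate along these lines turns "owner, test set, thresholds, rollback" into something a pipeline can enforce. This is a sketch with hypothetical names (`ChangeRequest`, `evaluate_change`). It assumes all metrics are higher-is-better and that the candidate must also not regress against the current version on the same frozen test set.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ChangeRequest:
    change_id: str
    owner: str                    # accountable person for this change
    current_version: str          # rollback target
    candidate_version: str
    test_set: str                 # identifier of a frozen, labeled evaluation set
    acceptance: Dict[str, float]  # metric -> minimum acceptable value
    approver: Optional[str] = None


def evaluate_change(req: ChangeRequest, candidate: Dict[str, float],
                    baseline: Dict[str, float]) -> dict:
    """Gate a model or rule change on approval, thresholds, and before/after metrics.

    Assumes every metric is higher-is-better (e.g. precision, recall).
    """
    missing = float("-inf")  # a metric that was not computed counts as a failure
    failures = [m for m, floor in req.acceptance.items() if candidate.get(m, missing) < floor]
    regressions = [m for m, before in baseline.items() if candidate.get(m, missing) < before]
    approved = req.approver is not None and not failures and not regressions
    return {
        "change_id": req.change_id,
        "approved": approved,
        "failures": failures,
        "regressions": regressions,
        # Anything that fails the gate stays on, or rolls back to, the current version.
        "deploy_version": req.candidate_version if approved else req.current_version,
    }
```

The design choice is that a prompt or rule edit is just another candidate version: it cannot reach production without an approver and a passing before/after comparison.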

6) Human-in-the-loop where risk is real

Capital markets workflows have hard accountability boundaries. Controlled agents keep humans in the loop at escalation points, while still compressing investigation time.

Example: the agent prepares the evidence pack and recommendation, but a compliance officer approves escalation to higher risk tiers.
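The approval point can be expressed as a tier check. In this sketch the tier names and the rule that the agent may route up to `MEDIUM` on its own are hypothetical policy choices. Above that tier the agent still assembles the evidence pack, but the case waits in `pending_approval` until a named person signs off.

```python
from enum import IntEnum
from typing import List, Optional


class RiskTier(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical policy: the agent may route up to MEDIUM on its own;
# anything higher needs a compliance officer's approval.
MAX_AUTONOMOUS_TIER = RiskTier.MEDIUM


def escalate(case_id: str, tier: RiskTier, evidence_pack: List[str],
             approver: Optional[str] = None) -> dict:
    if not evidence_pack:
        raise ValueError("an evidence pack is required before escalation")
    result = {"case_id": case_id, "tier": tier, "evidence": list(evidence_pack)}
    if tier <= MAX_AUTONOMOUS_TIER:
        return {**result, "status": "routed", "approved_by": "agent"}
    if approver is None:
        # The agent has done the preparation; the decision stays with a human.
        return {**result, "status": "pending_approval", "approved_by": None}
    return {**result, "status": "escalated", "approved_by": approver}
```

Investigation time still shrinks, because the reviewer starts from a prepared pack rather than raw data.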

7) Deterministic workflow around probabilistic models

The models can be probabilistic. The workflow should be deterministic. That means the same inputs follow the same steps, producing predictable artifacts for audit and monitoring.

Example: a fixed pipeline of detect → score → retrieve evidence → summarize → route → escalate (only if thresholds are met).
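The pipeline above can be sketched with the step order hard-coded, so the model never decides what happens next. The step functions here are toy stand-ins (a cancel-ratio rule in place of a real detector), and `ESCALATION_THRESHOLD` is a hypothetical value. Each step's output is fingerprinted with SHA-256 over canonical JSON, so a rerun on the same inputs can be compared step by step.

```python
import hashlib
import json
from typing import Callable, List, Tuple

ESCALATION_THRESHOLD = 0.9  # hypothetical value


def detect(s: dict) -> dict:
    # Toy detector standing in for a real model: flag a high cancellation ratio.
    return {**s, "detected": s["cancel_ratio"] > 0.8}


def score(s: dict) -> dict:
    return {**s, "score": s["cancel_ratio"] if s["detected"] else 0.0}


def retrieve_evidence(s: dict) -> dict:
    # Sort so the evidence list does not depend on retrieval order.
    return {**s, "evidence": sorted(s["order_ids"])}


def summarize(s: dict) -> dict:
    return {**s, "summary": f"{len(s['evidence'])} orders, score {s['score']:.2f}"}


def route(s: dict) -> dict:
    return {**s, "queue": "abuse_review" if s["detected"] else "no_action"}


def escalate_if_needed(s: dict) -> dict:
    return {**s, "escalate": s["score"] >= ESCALATION_THRESHOLD}


# The order is fixed in code, not chosen by the model at run time.
PIPELINE: List[Tuple[str, Callable[[dict], dict]]] = [
    ("detect", detect), ("score", score), ("retrieve_evidence", retrieve_evidence),
    ("summarize", summarize), ("route", route), ("escalate", escalate_if_needed),
]


def run_pipeline(case: dict) -> Tuple[dict, List[dict]]:
    """Run every step in order and fingerprint the state after each one."""
    state = dict(case)
    trace = []
    for name, step in PIPELINE:
        state = step(state)
        digest = hashlib.sha256(json.dumps(state, sort_keys=True).encode("utf-8")).hexdigest()
        trace.append({"step": name, "state_sha256": digest})
    return state, trace
```

If a probabilistic model sits inside `score`, the fingerprints make any run-to-run divergence visible at the exact step where it appears, rather than hiding it inside a final narrative.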

8) Regulator-ready audit trails by design

Auditability is not a report you generate later. It’s a property of the system. Controlled agents store the full chain: inputs, evidence, model versions, thresholds, and decisions.

Example: you can reconstruct exactly why a case was escalated six months ago, using the exact model versions and thresholds in force at the time.
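A minimal sketch of such a trail, assuming an in-memory, append-only log (`AuditLog` is a hypothetical name; production systems would persist to write-once storage). Each entry snapshots the inputs, evidence IDs, model versions, thresholds, and decision, and is hash-chained to the previous entry. This is a standard tamper-evidence technique: editing any stored entry breaks `verify()`.

```python
import hashlib
import json
from typing import Dict, List, Optional


def _sha256(obj) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained audit log (in memory for this sketch)."""

    def __init__(self) -> None:
        self._entries: List[dict] = []

    def record(self, case_id: str, decided_at: str, inputs: dict, evidence_ids: List[str],
               model_versions: Dict[str, str], thresholds: Dict[str, float],
               decision: str) -> dict:
        prev_hash: Optional[str] = self._entries[-1]["entry_hash"] if self._entries else None
        # JSON round-trip takes a deep snapshot, so later changes to the caller's
        # objects cannot alter what was recorded.
        body = json.loads(json.dumps({
            "case_id": case_id,
            "decided_at": decided_at,  # ISO 8601 timestamp
            "inputs": inputs,
            "evidence_ids": sorted(evidence_ids),
            "model_versions": model_versions,
            "thresholds": thresholds,
            "decision": decision,
            "prev_hash": prev_hash,
        }))
        entry = {**body, "entry_hash": _sha256(body)}
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = None
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash or _sha256(body) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True

    def find(self, case_id: str) -> List[dict]:
        """Everything recorded for a case, including the versions used at the time."""
        return [e for e in self._entries if e["case_id"] == case_id]
```

Answering "why was this escalated?" then becomes a lookup with `find(case_id)` rather than a reconstruction exercise.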

However, most “agentic AI” today ships without quality control

The trap is thinking “agentic” means “autonomous.” In regulated environments, autonomy without controls is a liability. Many teams deploy agents as conversational layers on top of tools, with inconsistent outputs, partial logging, and informal iteration. It works in demos, then breaks in production: false positives rise, analysts lose trust, and audits become painful because the system cannot reproduce its own decisions.

In capital markets, you don’t need agents that sound confident. You need agents that behave consistently.

Our point of view at KAWA

We apply Six Sigma principles to agentic AI in trading surveillance and risk escalation because the operating model matters more than the model.

KAWA agents are built as controlled processes:

- detect market abuse patterns with AI agents

- measure every decision, signal, and confidence score

- analyze root causes and drift, not just alert counts

- improve models and rules through controlled, auditable changes

- keep humans in the loop where risk is real

The goal is not to replace investigators. It’s to reduce noise, compress investigation cycles, and produce the kind of evidence trail that stands up to scrutiny.

Practical outcomes teams can repeat internally

When you treat agents as controlled processes, you get outcomes that compound over time:

- Fewer false positives because errors are measured and systematically reduced

- Faster investigations because evidence gathering and summarization are structured

- Regulator-ready audit trails because every decision is traceable and reproducible

- Safer production deployment because scope, approvals, and versions are controlled

- More trust from stakeholders because behavior is consistent, not “model mood dependent”

- Continuous improvement because drift and failure modes are treated as first-class signals

Conclusion

Agentic AI in capital markets is inevitable. The question is whether it becomes an asset or a liability. If agents behave like chatbots, you get inconsistency and compliance risk. If agents behave like controlled processes, you get measurable quality, faster investigations, and audit-ready decisioning at scale.

In high-stakes environments, quality control is the product. Six Sigma agent architecture is how you make agents trustworthy in production.